Unraveling Oral Communication from Brain Signals: A Milestone in Brain-Machine Communication Technologies

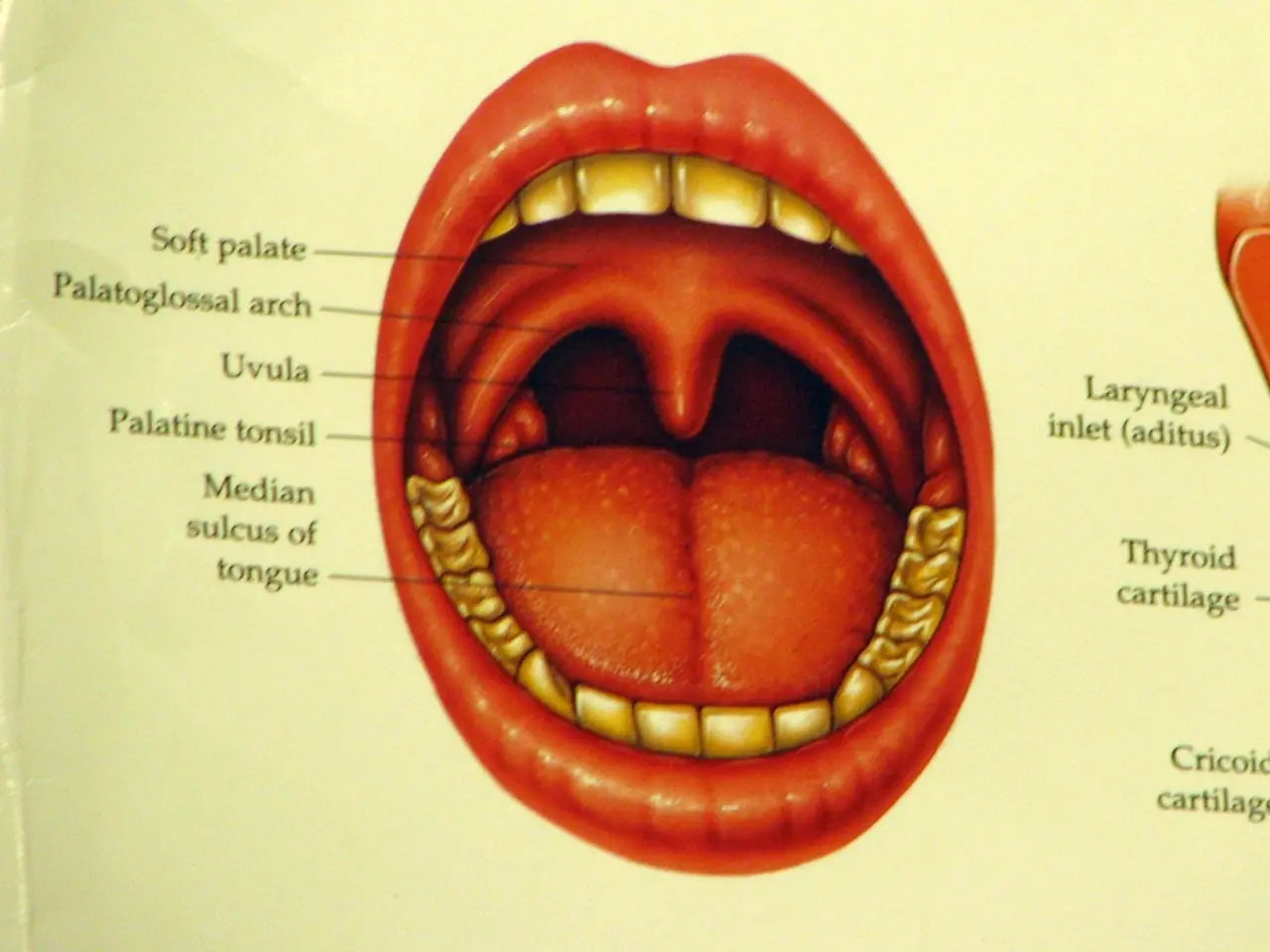

A recent study posted on the preprint server arXiv presents a deep learning model intended to help restore communication for patients who have lost the ability to speak because of neurological conditions. The paper, titled "DMF2Mel: A Dynamic Multiscale Fusion Network for EEG-Driven Speech Decoding," describes a model that decodes speech features (mel spectrograms) from non-invasive EEG recordings, a notable advance for the field.

### Key Innovations in the Model

The model introduces a new architecture, the Dynamic Multiscale Fusion Network (DMF2Mel), which combines several components to improve speech decoding from electroencephalography (EEG) signals. It targets continuous imagined speech spanning long durations rather than isolated words or letters.

One of the key components of the DMF2Mel model is the Dynamic Contrastive Feature Aggregation Module (DC-FAM), which effectively separates speech-related "foreground features" from noisy "background features." This selective enhancement suppresses interference and boosts the representation of transient neural signals related to speech.
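
To make the foreground/background idea concrete, here is a toy sketch in plain Python. It is not the DC-FAM module from the paper: it simply assumes the "background" is a slowly varying trend (a centered moving average) and the "foreground" is the transient residual, re-weighted by a sigmoid gate so that large deviations are kept and small ones suppressed. The function names and the `window` and `sharpness` parameters are hypothetical.

```python
import math

# Toy illustration only: NOT the DC-FAM module from the paper.
# Assumption: background = slow trend (centered moving average),
# foreground = transient residual, re-weighted by a sigmoid gate.

def moving_average(x, window):
    """Centered moving average; near the edges only available samples are used."""
    half = window // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out

def split_foreground_background(x, window=5, sharpness=4.0):
    """Split a 1-D signal into a gated transient part and a slow background.

    Hypothetical parameters: `window` sets the background smoothing and
    `sharpness` controls how steeply the gate favours large deviations.
    """
    background = moving_average(x, window)
    residual = [xi - bi for xi, bi in zip(x, background)]
    scale = max(abs(r) for r in residual) or 1.0
    # Gate in (0, 1): larger normalized deviations get weights closer to 1.
    gate = [1.0 / (1.0 + math.exp(-sharpness * (abs(r) / scale - 0.5)))
            for r in residual]
    foreground = [g * r for g, r in zip(gate, residual)]
    return foreground, background

# A flat, slightly noisy signal with one transient spike at index 4.
signal = [0.0, 0.1, 0.0, 0.1, 2.0, 0.1, 0.0, 0.1, 0.0]
fg, bg = split_foreground_background(signal)
```

In a learned model the gate would come from trained weights rather than a fixed threshold; the fixed rule here only illustrates "enhance transients, suppress the rest".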

Another significant component is the Hierarchical Attention-Guided Multi-Scale Network (HAMS-Net), based on a U-Net framework. HAMS-Net fuses high-level semantic information with low-level details across multiple scales, improving the fine-grained reconstruction of speech features.
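
The U-Net pattern behind this kind of multiscale fusion can be sketched on a 1-D sequence. This is a simplified illustration under stated assumptions, not HAMS-Net: downsampling is pairwise averaging, upsampling is nearest-neighbour repetition, and each decoder level blends the coarse (semantic) path with the matching skip connection (fine detail) using a fixed weight `w_detail` where the real network would use learned attention.

```python
# Toy 1-D illustration of U-Net-style multiscale fusion (not HAMS-Net itself).
# Assumptions: average pooling down, nearest-neighbour repetition up,
# and a fixed blend weight in place of learned attention.

def downsample(x):
    """Average adjacent pairs (length must be even)."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]

def upsample(x):
    """Repeat each sample twice (nearest-neighbour upsampling)."""
    return [v for v in x for _ in range(2)]

def multiscale_fuse(x, levels=2, w_detail=0.5):
    """Encoder: halve resolution `levels` times, saving each level as a skip.
    Decoder: upsample the coarse path and blend in the skip at each level."""
    if len(x) % (2 ** levels):
        raise ValueError("length must be divisible by 2**levels")
    skips = []
    h = x
    for _ in range(levels):
        skips.append(h)
        h = downsample(h)
    for skip in reversed(skips):
        up = upsample(h)
        h = [w_detail * s + (1 - w_detail) * u for s, u in zip(skip, up)]
    return h

x = [1.0, 3.0, 2.0, 6.0, 4.0, 4.0, 0.0, 8.0]
y = multiscale_fuse(x)
print(y)  # [1.75, 2.75, 2.75, 4.75, 4.0, 4.0, 2.0, 6.0]
```

The output keeps the local structure of the input (fine detail from the skips) while being pulled toward the coarse trend, which is the trade-off the fusion weights control.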

The SplineMap Attention Mechanism, which incorporates the Adaptive Gated Kolmogorov-Arnold Network (AGKAN), enhances feature extraction from EEG signals by combining global context modeling with spline-based local fitting for better localized attention.
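
Kolmogorov-Arnold Networks replace fixed activations with learnable one-dimensional functions, usually built from B-splines. The sketch below uses the simplest case, degree-1 ("hat") B-splines on a uniform grid, plus a hypothetical sigmoid gate that blends the local spline path with a global SiLU path. How AGKAN actually gates its splines is not described in the article, so `gated_kan_edge` and `gate_logit` are illustrative assumptions only.

```python
import math

# Sketch of a KAN-style learnable edge function (degree-1 B-splines).
# The gating scheme is a hypothetical stand-in, not AGKAN's actual design.

def hat_basis(x, grid):
    """Degree-1 B-spline ("hat") basis values at x on a uniform grid.
    Outside the grid every basis value is 0."""
    step = grid[1] - grid[0]
    return [max(0.0, 1.0 - abs(x - g) / step) for g in grid]

def spline(x, grid, coeffs):
    """Piecewise-linear learnable function: sum_i c_i * B_i(x)."""
    return sum(c * b for c, b in zip(coeffs, hat_basis(x, grid)))

def silu(x):
    return x / (1.0 + math.exp(-x))

def gated_kan_edge(x, grid, coeffs, gate_logit):
    """Blend a local spline path with a global SiLU path.
    In a trained model, coeffs and gate_logit would be learned."""
    g = 1.0 / (1.0 + math.exp(-gate_logit))
    return g * spline(x, grid, coeffs) + (1.0 - g) * silu(x)

grid = [-2.0, -1.0, 0.0, 1.0, 2.0]
coeffs = [0.0, -0.5, 0.0, 1.0, 0.3]
print(gated_kan_edge(0.5, grid, coeffs, gate_logit=2.0))
```

The "local fitting" property is visible in the basis: each coefficient only affects inputs within one grid step of its knot, so adjusting one part of the function leaves the rest unchanged.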

Lastly, the Bidirectional State Space Module (convMamba) captures long-range temporal dependencies at a computational cost that grows linearly with sequence length, while also improving the modeling of the nonlinear dynamics that characterize complex, speech-related EEG signals.
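
Why a state space scan is linear in sequence length, and what "bidirectional" adds, can be shown with a scalar linear recurrence. This is a deliberately minimal sketch: real Mamba layers use input-dependent ("selective") parameters, multi-dimensional states and hardware-aware scans, and the convolutional and nonlinear parts of convMamba are not reproduced. The fixed coefficients `a`, `b`, `c` are assumptions.

```python
# Minimal sketch of a bidirectional linear state-space scan.
# Scalar state, fixed parameters; NOT convMamba's actual formulation.

def ssm_scan(x, a=0.9, b=1.0, c=1.0):
    """h_t = a*h_{t-1} + b*x_t ; y_t = c*h_t. One pass over x: O(T) time."""
    h, out = 0.0, []
    for xt in x:
        h = a * h + b * xt
        out.append(c * h)
    return out

def bidirectional_ssm(x, a=0.9):
    """Scan forward and backward, then sum, so every output step sees
    both past and future context; total cost stays linear in len(x)."""
    fwd = ssm_scan(x, a)
    bwd = ssm_scan(x[::-1], a)[::-1]
    return [f + r for f, r in zip(fwd, bwd)]

impulse = [0.0, 0.0, 1.0, 0.0, 0.0]
print(bidirectional_ssm(impulse, a=0.5))  # [0.25, 0.5, 2.0, 0.5, 0.25]
```

The impulse response decays geometrically in both directions (the centre step is counted by both passes), which shows how information from one time step propagates to distant steps without the quadratic cost of full attention.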

### Performance and Comparison to Previous Attempts

The DMF2Mel model shows substantial gains in reconstructing continuous speech features from non-invasive recordings, a persistent challenge in the field. For mel spectrogram reconstruction, it achieved a Pearson correlation coefficient of 0.074 for known subjects (those seen during training), a 48% improvement over the baseline, and 0.048 for unknown (unseen) subjects, a 35% improvement over the baseline.
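
The sketch below shows how a Pearson correlation score is computed and what baseline scores the quoted relative improvements imply. Averaging the correlation over mel bands, and the list-of-bands data layout, are assumptions about the evaluation protocol rather than details taken from the paper. Because the percentages are presumably rounded, the implied baselines (roughly 0.050 and 0.036) are approximations, not figures reported in the study.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def mean_band_correlation(pred, target):
    """Average Pearson r over mel bands. Assumed layout: pred and target are
    lists of bands, each band a list of per-frame values."""
    rs = [pearson(p, t) for p, t in zip(pred, target)]
    return sum(rs) / len(rs)

def implied_baseline(score, relative_gain):
    """Baseline implied by 'score is relative_gain better than baseline'."""
    return score / (1.0 + relative_gain)

print(round(implied_baseline(0.074, 0.48), 3))  # 0.05  (known subjects)
print(round(implied_baseline(0.048, 0.35), 3))  # 0.036 (unknown subjects)
```

Correlations this small are typical for non-invasive speech reconstruction, which is why relative improvements over a baseline are the more informative comparison.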

Traditional linear and non-linear stimulus reconstruction models used in auditory attention decoding (AAD) mainly track the speech envelope and often rely on two-stage pipelines. DMF2Mel, by contrast, is trained more end-to-end and is designed to reconstruct detailed mel spectrograms, capturing richer speech features than the envelope alone.
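
The difference between the two reconstruction targets is easy to see in code: a speech envelope is a single time series, while a mel spectrogram has one value per mel band for every frame. The sketch below uses a crude envelope (rectify, then moving average) and the HTK-style mel formula. The choice of 10 bands and an 8 kHz upper limit are arbitrary assumptions for illustration, not DMF2Mel's configuration.

```python
import math

def envelope(x, window=4):
    """Crude amplitude envelope: full-wave rectify, then a trailing moving
    average. The result is a single time series (one value per sample)."""
    rect = [abs(v) for v in x]
    out = []
    for i in range(len(rect)):
        seg = rect[max(0, i - window + 1): i + 1]
        out.append(sum(seg) / len(seg))
    return out

def hz_to_mel(f):
    """HTK-style mel scale."""
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10 ** (m / 2595.0) - 1.0)

def mel_band_centers(n_mels, f_min=0.0, f_max=8000.0):
    """Centre frequencies of n_mels filters spaced evenly on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + step * (k + 1)) for k in range(n_mels)]

tone = [math.sin(2 * math.pi * k / 8) for k in range(32)]
env = envelope(tone)            # envelope target: one value per time step
centers = mel_band_centers(10)  # mel target: ten band values per frame
```

The mel bands are packed densely at low frequencies and spread out at high frequencies, so a mel spectrogram preserves spectral detail (such as formant structure) that a single envelope discards.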

### Implications and Future Prospects

Speech-decoding algorithms could eventually help patients with neurological conditions communicate fluently using non-invasive sensors such as EEG and magnetoencephalography (MEG) rather than surgically implanted electrodes. This progress opens promising avenues for future brain-computer interface applications, such as neuro-steered hearing aids and speech communication devices for people with disabilities who rely on non-invasive brain monitoring.

Every year, thousands of people lose the ability to speak due to brain injuries, strokes, ALS, and other neurological conditions. Being unable to communicate can significantly diminish a person's quality of life and take away their autonomy and dignity. This study represents a milestone at the intersection of neuroscience and artificial intelligence, pushing the boundaries of what is possible in decoding speech from non-invasive brain signals.

The model achieved 44% top accuracy in identifying individual words from MEG signals, a significant milestone in decoding speech from non-invasive brain recordings. If this line of research succeeds, the technology may one day help restore natural communication to patients with neurological conditions and speech loss.

The model was trained on public datasets comprising 15,000 hours of speech data from 169 participants, and it shows strong zero-shot decoding of unseen sentences. Advanced AI could synthesize words and sentences on the fly, giving a voice to the voiceless. Hearing their own voice express their thoughts and feelings could help patients regain a sense of identity and autonomy, improving social interaction, emotional health, and quality of life.

